This post discusses how developers at Intervals switched from using PDFLib in a single page load for PDF exports to wkhtmltopdf in multiple processes to allow for improved PDF appearance, reduced server load, and enhanced usability for the end user.

In this post…

The Search for a New PDF Library

One awesome feature of Intervals is that it contains export functionality for nearly every page in the application, including the ability to export to PDF.

When we first decided to do PDF exports, we chose to go with PDFLib, largely because of its extensive documentation, its large user base, and its reasonable price. It seemed like a good fit. Most list exports in Intervals pass through a single object, which formats them in a tabular manner, then outputs them as either HTML or CSV. So adding one additional output format for PDF through PDFLib was rather straightforward.

Over time, however, our enthusiasm for PDFLib started to wane. Because the capabilities of the PDF specification and the PDFLib library were fundamentally different from HTML, the appearance of our PDF exports began to diverge from that of our web application. Frankly, the exports were ugly.

Pictured above, the PDF export on top, the web application on the bottom. As you can see, they were quite different in appearance.

Furthermore, Intervals users who tried to export large reports often had mixed success. Reports longer than 150 pages would time out. We had to tell users to export smaller reports, and this simply wasn’t acceptable. Not only that, large reports often produced large CPU spikes that would slow down the rest of the system and/or trigger server warnings.

Towards the end of last year, we began an initiative to replace the PDF engine behind the export with the following goals in mind:

- increased PDF generation speed and efficiency

- do away with any upper limits on how large a report a user could produce

- cut down on visual differences between PDF exports and web application

- improve the look of the PDFs

The First Contender: TCPDF

We began our search for a suitable replacement with one primary criteria: it had to convert HTML to a PDF. This would satisfy the last two goals in our list and would simplify code by ensuring that as long as we kept everything on the web app side looking correct, that would be reflected in the PDF exports. The first product we looked at was TCPDF. It could convert HTML into PDFs, and it was PHP-based. This was great. This meant all we had to do was create our output in HTML and then pass it through TCPDF.

Conversion was relatively straightforward. We merely unplugged PDFLib and replaced it with the new library. The PDFs themselves looked glorious; near-perfect representations of what we had on the screen (which we expected, since they were both sourced from the same HTML).

Unfortunately, we immediately ran into a huge hurdle. The time it took to convert a HTML page to a PDF export actually increased. This wouldn’t have been such a bad thing, except that the increases in time to export increased exponentially with the size of the table being output. Large exports bumped up against our execution time limit. And to make matters worse, really large exports bumped up against our PHP memory limit. We loved the way our PDF exports looked, but it was starting to look like this switch could potentially create more drawbacks than benefits.

We decided to tackle these issues one at a time, starting with the timeout issues.

Deferred Process Execution

In order to deal with our timeout issues, we concluded that we had to find some way to let PDF creation take longer than the standard maximum time PHP processes are allotted, and we had to do so in a way that wouldn’t turn away users. We first explored the possibility of making PDF creation a background job. Perhaps we could store HTML output in a queue and email the users with links to the created PDFs (or the actual files themselves after the job was completed). We decided it would be too annoying to users. It takes them out of the application, and might frustrate them if they had a hard time finding the email or the link to the created PDF. Impatient users might unnecessarily hammer the servers trying to create PDFs repeatedly.

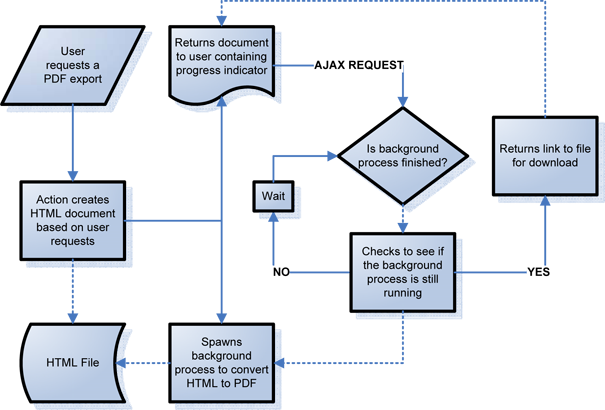

We concluded we would have to come up with a way to process PDFs immediately, but in a background process. We’d have to give the users some sort of progress indicator to let them know that PDF generation was working, and hadn’t failed. And we’d have to give that process a lower priority so that the servers wouldn’t get overwhelmed. We put our heads together, bounced around ideas, and came up with the following:

- When the user requests a PDF export, we’re going to send her to a new page for generating PDFs. On that page load, the action will create the HTML that will eventually be turned into a PDF, and save it to a temporary file on the server.

- Once the HTML file has been created, the action will create a PHP background process. That background process will pull in the HTML from the file and begin running it through TCPDF.

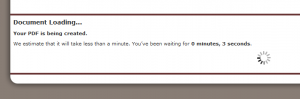

- Based on the size of the HTML created, we’ll create an estimate of how long the background process ought to take, and we’ll display that to the user in the form of the progress indicator. On the user’s page, we’ll create a periodical ajax call in Javascript to a third script to check the progress of the PDF execution.

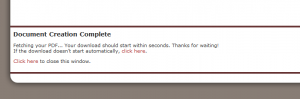

- The progress script will report whether the background PDF creation process is still running or not. If it’s running, we’ll return that to the user’s page to continue waiting. If the process is not running, we’ll check the created file for markers of a successful PDF creation; if the PDF has been created successfully, we’ll send a download link to the user’s page so they can download the file.

Pictured above, the process by which the user interacts with the system during a request for a PDF export.

Here are some essential PHP snippets to do this.

To spawn the background process:

$pID = shell_exec("nohup nice -n +15 php /pdfdir/pdfgen.php $temp_file > /dev/null 2> /dev/null & echo $!");

The process is actually created through the command line. This requires the installation of the php-cli package. This is what gets around the execution time limit, as it doesn’t pass through the web server. nohup tells the command to ignore the hangup signal. Normally shell_exec() has to wait until the command has completed in order to continue execution of the PHP script; nohup allows the PHP background process to execute without requiring the processes that spawns it to wait. > /dev/null and 2> /dev/null tell the command to send all output (STDOUT and STDERR) to /dev/null. Without these, some OSes may prevent shell_exec() from continuing until the spawned process has completed. nice -n +15 simply sets the background process’ priority to very low. Finally, & echo $! returns the OS process ID; we will use this in the progress script later to see if the process is still running.

Checking to see if the background process is currently running:

exec("ps $pID", $ProcessState);

$isRunning = (count($ProcessState) >= 2);

We use exec() here rather than shell_exec() because we want to capture the output.

The Results, First Pass

Built out, the results were promising. The progress indicator worked fairly accurately, the load on the system wasn’t too great, and the users got their PDFs delivered quickly (and best of all, they looked good). But our testing quickly turned up a major flaw: for the larger PDFs (150+ pages) we were still hitting our PHP memory limit. The background PHP script would fail fatally, and we’d be forced to tell the user that their PDF couldn’t be generated, and to try a smaller date range or a more limited list of items.

This was unacceptable. We knew PHP in general and TCPDF in particular were the main liabilities in the equation here. PHP can have a high memory overhead versus compiled languages, and TCPDF’s codebase was huge; we didn’t want to have to modify it to support our needs.

The Second Contender: wkhtmltopdf

During our trials, one solution kept popping up. wkhtmltopdf was a tiny shell application for converting HTML code into PDF. It was written in C, which meant we wouldn’t have to deal with memory limits. Even better, it used the WebKit HTML rendering engine, the same engine used by Safari and Chrome, meaning that we could count on the HTML to be displayed accurately exactly as we intended it.

Because code was already in place to allow deferred processing of PDF exports, swapping out the small part that converted the HTML to PDF was easy. And much to our delight, the performance gains were massive. We no longer hit a memory ceiling. We also saw dramatically improvements in speed.

Analysis

We’d hit all our goals: the PDFs now looked much better, users could export huge reports without timing out or encountering fatal errors, and our servers got a break. In total, all development took about 80 developer-hours, which is honestly much less time than it took to adapt Intervals to use PDFLib initially.

Thank you! We are having the same problem… I’m gonna use your solution.